Music games are a dime a dozen in app stores, and they go all the way back to the original PaRappa the Rapper and Beatmania. There have been dozens of takes on how to do the genre justice, from gem smashers in Rock Band to obstacle courses in Vib-Ribbon to omnidirectional commands in Gitaroo Man. They all have one thing in common: they need a way to make those punchy note placements land right on the beat. What you might not know is the process that makes that happen.

A music game can be defined pretty simply as a music track paired with a game mechanic that asks the player to provide input corresponding to features in the music. Those features could be the beat, the lyrics, or abstractions of the notation. What varies from game to game is how the notes get placed. There are three primary approaches: manual placement, MIDI-derived placement, and procedural analysis of the audio.

Games like Beat Saber and Guitar Hero use manual placement. They usually employ a handful of specialists who listen to the audio and make sure the musical abstraction “feels” right. The process resembles level design, though it is somewhat simpler.

The second method is MIDI-derived placement. Because a MIDI file already stores each note as a timed event, many of the piano-style games found in app stores use this method to quickly generate accurate “notes.” Beatmania also used a similar approach.
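To make the MIDI route concrete, here is a minimal Python sketch of the core conversion, assuming the note-on events have already been parsed out of the file. MIDI stores event positions in ticks, with the ticks-per-quarter-note resolution (PPQ) in the file header and tempo expressed as microseconds per quarter note, so each tick position can be converted into seconds of audio. The `NoteOn` class, the `(tick, tempo)` tempo-map format, and the function names are hypothetical illustrations, not any particular library's API.

```python
from dataclasses import dataclass

# Hypothetical, simplified representation of already-parsed MIDI data.
# A real project would read these values from a .mid file with a MIDI parser.


@dataclass
class NoteOn:
    tick: int   # absolute position in MIDI ticks
    pitch: int  # MIDI note number (60 = middle C)


def ticks_to_seconds(tick, ppq, tempo_map):
    """Convert an absolute tick position to seconds.

    ppq: ticks per quarter note (from the MIDI file header).
    tempo_map: list of (start_tick, microseconds_per_quarter) pairs,
               sorted by tick and starting at tick 0.
    """
    seconds = 0.0
    for i, (start, tempo) in enumerate(tempo_map):
        end = tempo_map[i + 1][0] if i + 1 < len(tempo_map) else None
        if end is not None and tick >= end:
            # The whole tempo segment lies before the target tick.
            seconds += (end - start) * tempo / (ppq * 1_000_000)
        else:
            # The target tick falls inside this segment.
            seconds += (tick - start) * tempo / (ppq * 1_000_000)
            break
    return seconds


def notes_to_chart(notes, ppq, tempo_map):
    """Turn note-on events into (time_in_seconds, pitch) gameplay cues."""
    ordered = sorted(notes, key=lambda n: n.tick)
    return [(ticks_to_seconds(n.tick, ppq, tempo_map), n.pitch) for n in ordered]


if __name__ == "__main__":
    # 480 PPQ; 120 BPM (500,000 us per beat), doubling to 240 BPM at tick 1920.
    tempo_map = [(0, 500_000), (1920, 250_000)]
    notes = [NoteOn(0, 60), NoteOn(2400, 67), NoteOn(960, 64)]
    print(notes_to_chart(notes, 480, tempo_map))
    # prints [(0.0, 60), (1.0, 64), (2.25, 67)]
```

The tempo map is walked segment by segment because a tempo change shifts the timing of every note after it. A chart built this way lines up with the audio only if the MIDI file was authored against the same recording.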

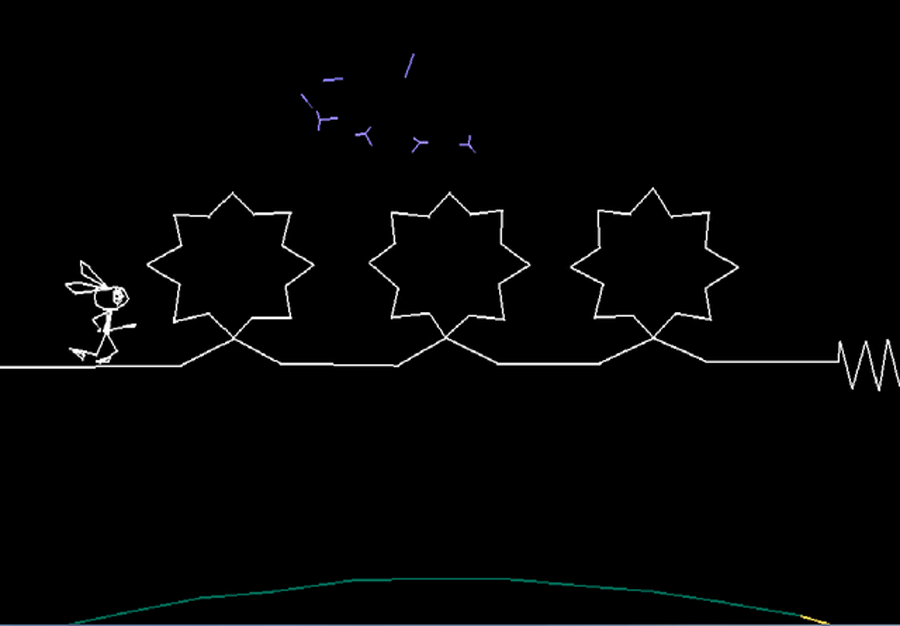

The last method, programmatic or procedural generation, is easily the least understood. It uses a series of algorithms to derive synced “notes” that correspond to the waveform. Games like Audiosurf use a proprietary set of analyses that parse a music file’s waveform and reduce it to a series of onsets. You might notice the difference in quality, or rather the trade-off, between something like Audiosurf and Beat Saber. Audiosurf lets players upload any file they choose, but it's then up to a machine to suss out what might be called notes. The quality of that output depends largely on the quality of the track and on how well the different voices, or instruments, are separated within the waveform.
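Audiosurf's analysis is proprietary, so the sketch below is not its method. It is a minimal Python illustration of one textbook way to reduce a waveform to onsets: split the samples into fixed-size frames, measure each frame's RMS energy, and report a note wherever that energy jumps sharply. The function names, the non-overlapping frames, and the default `threshold` are illustrative assumptions; practical detectors typically use overlapping windows, spectral features, and adaptive thresholds.

```python
import math


def frame_rms(samples, frame_size):
    """RMS energy of consecutive, non-overlapping frames of `samples`."""
    values = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        values.append(math.sqrt(sum(x * x for x in frame) / frame_size))
    return values


def detect_onsets(samples, sample_rate, frame_size=512, threshold=0.1):
    """Return onset times in seconds, found where frame energy jumps.

    A frame is flagged when its RMS exceeds the previous frame's RMS by more
    than `threshold` and the previous frame was not itself flagged, so a
    single attack spread over two frames yields one onset. The threshold is
    an arbitrary illustrative value that would need tuning per song.
    """
    rms = frame_rms(samples, frame_size)
    onsets = []
    previous_rise = 0.0
    previous_rms = 0.0
    for i, value in enumerate(rms):
        rise = max(0.0, value - previous_rms)  # only increases in energy count
        if rise > threshold and previous_rise <= threshold:
            onsets.append(i * frame_size / sample_rate)
        previous_rise, previous_rms = rise, value
    return onsets
```

On an isolated drum hit this works well. On a dense mix, however, a loud chord and a quiet vocal entrance look very different in energy, so the quiet entrance may never cross the threshold at all.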

We at Sonic Bloom are constantly asked whether Koreographer can be made to “detect” notes in a piece of music. Although it supports rudimentary FFT and RMS analysis, we always prefer manual or MIDI-derived markup. The difficulty with automated systems is that no magic set of algorithms will always produce a perfect result. Even though a human can hear distinct instruments and lyrics, a computer sees a garbled mess of waveforms phasing across each other, constantly changing in frequency and amplitude. Pulling a distinct “voice” out of a mastered audio file often requires parameters tailored to each song, or a heavily trained machine learning model built to do just that. The most reliable way to get acceptable output from an algorithm is to isolate the voice you want first. Fortunately, the big brains in MIR (music information retrieval) research have built impressive systems that can pluck a vocal or guitar track directly from a mastered piece of audio with minimal loss of quality. In essence, these systems create stem tracks from a master, and each stem can then be passed to an algorithm built specifically for that instrument type.
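The sketch below is not Koreographer code. It is a plain-Python illustration of the crudest possible form of “isolation”: tracking the amplitude at a single frequency, frame by frame, using the Goertzel algorithm (a way to compute one DFT bin without a full FFT). On a synthetic mix of a sustained 440 Hz pad and short 2 kHz blips, the 2 kHz track picks out the blips cleanly. The function names and test frequencies are illustrative assumptions. Real instruments spread harmonics across many bins that overlap with other instruments, which is exactly why dedicated separation models are needed on real mixes.

```python
import math


def goertzel_amplitude(frame, sample_rate, freq):
    """Estimate the amplitude of the sinusoid near `freq` in one frame.

    Uses the Goertzel algorithm, which evaluates the single DFT bin nearest
    `freq` with a second-order recurrence instead of a full FFT.
    """
    n = len(frame)
    k = round(n * freq / sample_rate)  # nearest DFT bin index
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev = s_prev2 = 0.0
    for x in frame:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    power = s_prev2 ** 2 + s_prev ** 2 - coeff * s_prev * s_prev2  # |X[k]|^2
    # Clamp tiny negative rounding errors, then rescale: an on-bin sine of
    # amplitude A has |X[k]| = A * n / 2.
    return math.sqrt(max(power, 0.0)) * 2.0 / n


def frequency_track(samples, sample_rate, frame_size, freq):
    """Per-frame amplitude at one frequency across a whole signal."""
    return [
        goertzel_amplitude(samples[start:start + frame_size], sample_rate, freq)
        for start in range(0, len(samples) - frame_size + 1, frame_size)
    ]
```

Choosing a frame size that fits a whole number of cycles of each test tone (here, 400 samples at 8 kHz) keeps the bins clean. Real music offers no such guarantee, so energy leaks between neighboring bins.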

Creating stems from a music track simplifies the problem immensely. What would normally be a voice buried under lots of competing sound can now be read as an isolated waveform with distinct notes. You might say, “Then the problem is solved! Why aren’t there more games like Audiosurf that let me upload any song I want?” The answer is computational cost and complexity. A system that always gets great results from any song is an extremely complex system that adds loads of development time to a game. You might need a semi-intelligent system to first isolate each instrument voice, then run a series of algorithms that detect onsets, notes, and words, and finally choose the results that work best. Such a system could yield the results everyone wants, but at a computational cost that could seriously impact loading times. Once you understand what it costs to build an automated system correctly, it becomes apparent why such systems are still missing from games.

This is why games like Beat Saber and Guitar Hero employ small armies of golden-eared conservatory grads to carefully map out the progression of notes. That care matters all the more in a genre so invested in the “feel” of playing like a musical maestro. So, next time you throw on a VR headset and start drumming in the air to a neon obstacle course, think of the hours of work poured into giving you that amazing experience.